Ethereum co-founder Vitalik Buterin is calling for social media platforms to use cryptography and blockchain tools to make their content-ranking systems more transparent and verifiable.

In a Monday X post, Buterin argued that X should use zero-knowledge proofs (ZK-proofs) and blockchain technology to prove that the algorithm determining the reach of content on the platform is fair. On Dec. 9, he had taken issue with how X is run, arguing that owner Elon Musk's approach to leading the platform is harmful:

“Elon Musk I think you should consider that making X a global totem pole for free speech, and then turning it into a death star laser for coordinated hate sessions, is actually harmful for the cause of free speech.”

Ethereum Foundation AI lead Davide Crapis responded to Buterin's initial post, saying, “If you want to claim X is the platform for free speech, you should disclose your algorithm optimization targets.” He added that the algorithm “should be legible to the users, and tweakable.”

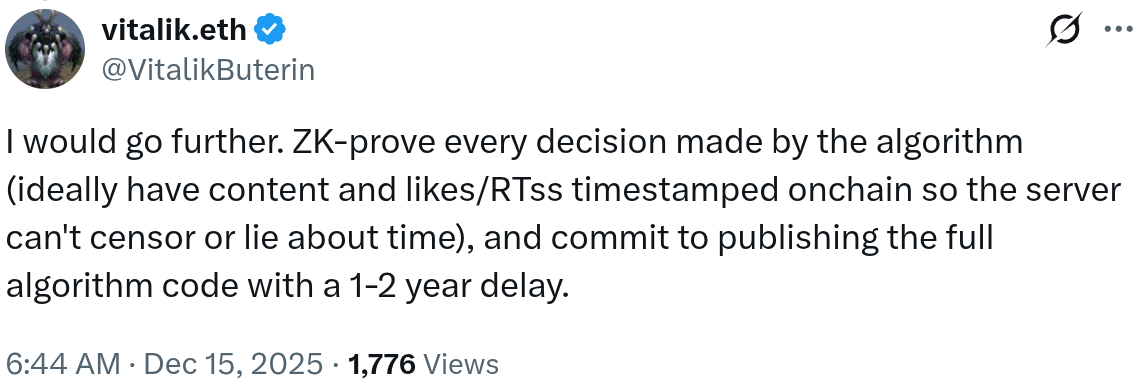

Buterin proposed a verifiable system that would use ZK-proofs for every decision the algorithm makes and timestamp all content, likes, and retweets on a blockchain “so the server can’t censor or lie about time.” Under his proposal, the platform would also “commit to publishing the full algorithm code with a 1-2 year delay.”
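
Buterin did not publish code or specify how the timestamping would work. As a rough illustration of why recording events in a chain makes them hard to alter, here is a minimal Python sketch, assuming a simple append-only hash chain as a stand-in for a blockchain. Each event (a post, like, or repost) is hashed together with the hash of the previous entry, so quietly editing or reordering any past event breaks every later link. The class and method names (`TimestampLog`, `append`, `verify`) and the event fields are hypothetical. A server that controls the whole log could simply recompute every hash, which is why a real system would anchor these hashes on a public blockchain rather than keep them on its own servers.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class TimestampLog:
    """Hypothetical append-only log in which each entry commits to the one before it."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(prev_hash: str, event: dict) -> str:
        # Canonical JSON (sorted keys) so identical events always hash identically.
        payload = json.dumps(event, sort_keys=True) + prev_hash
        return hashlib.sha256(payload.encode()).hexdigest()

    def append(self, event: dict) -> str:
        """Record an event, chaining it to the hash of the latest entry."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        h = self._digest(prev_hash, event)
        self.entries.append({"event": event, "prev": prev_hash, "hash": h})
        return h

    def verify(self) -> bool:
        """Recompute every link; any edited or reordered event makes this fail."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if self._digest(prev_hash, entry["event"]) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = TimestampLog()
    log.append({"type": "post", "id": 1, "time": 1765000000})
    log.append({"type": "like", "post_id": 1, "time": 1765000005})
    print(log.verify())  # True

    # Simulate the server rewriting when the post was made.
    log.entries[0]["event"]["time"] = 1765009999
    print(log.verify())  # False
```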

ZK-proofs are a cryptographic way to prove that something is true without revealing the underlying data. For instance, a user could prove that they are over 18 without sharing their full name. Buterin did not detail what the proofs would demonstrate in his proposed system, but they would likely show that the algorithm's decisions followed certain constraints without exposing sensitive details.
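
Proving an age claim requires a more elaborate construction, but the core idea can be seen in one of the simplest classic zero-knowledge protocols: a Schnorr proof that the prover knows a secret number `x` behind a public value `y = g^x mod p`, made non-interactive with the Fiat-Shamir hash trick. The Python sketch below is only an illustration of that general technique, not anything Buterin proposed. The function names are hypothetical, and the group parameters are deliberately tiny toy values that offer no real security; practical systems use groups with roughly 256-bit order, typically elliptic curves.

```python
import hashlib
import secrets

# Toy parameters, far too small for real use: P = 2Q + 1 is a safe prime,
# and G = 4 generates the subgroup of prime order Q.
P = 1019
Q = 509
G = 4


def challenge(y: int, t: int) -> int:
    """Fiat-Shamir: derive the verifier's challenge by hashing the public values."""
    data = f"{G}:{y}:{t}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(x: int) -> tuple[int, int, int]:
    """Prove knowledge of x with y = G^x mod P, without revealing x."""
    y = pow(G, x, P)
    r = secrets.randbelow(Q)  # fresh random nonce that masks x
    t = pow(G, r, P)          # commitment
    c = challenge(y, t)
    s = (r + c * x) % Q       # response; on its own it reveals nothing about x
    return y, t, s


def verify(y: int, t: int, s: int) -> bool:
    """Accept if G^s == t * y^c (mod P); the verifier never learns x."""
    c = challenge(y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


if __name__ == "__main__":
    secret = 123
    y, t, s = prove(secret)
    print(verify(y, t, s))            # True
    print(verify(y, t, (s + 1) % Q))  # False: a tampered response is rejected
```

The check works because `G^s = G^r * (G^x)^c = t * y^c`, while the random nonce `r` keeps the response `s` from leaking the secret.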


Crypto takes on social media

Buterin’s post echoed the sentiment behind decentralized social media platforms, often grouped under the label SocialFi. Although none has reached mainstream adoption, such initiatives appear to be taken seriously by their centralized counterparts.

In early 2025, Meta, the parent company of Facebook and Instagram, blocked links to a decentralized Instagram competitor called Pixelfed. All links to the platform were labeled as “spam” and removed immediately. Others claimed that links to competing platforms, including Mastodon, were given the same treatment.

The crypto community, which tends to be wary of centralized control, has previously raised concerns about the impact of decisions made by social media platforms' leadership. When Musk announced in early January that X would prioritize content deemed informative or educational over other types of content, many were not convinced.

Critics questioned who would decide what qualifies and whether the policy could become a vehicle for suppressing certain viewpoints. Musk has also been accused of limiting access to premium features for users who disagreed with him.

Buterin also weighed in at the time, urging Musk to stay committed to free speech on the platform and not to ban users over disagreements or for expressing their views.


The impact of social media on society

Research has long shown that social media has an outsized impact on society and the functioning of democratic processes. A paper published in 2024 suggested that “access to Facebook may increase belief in misinformation.”

Reuters also reported last month that recent court filings suggested that Meta shut down internal research into the mental health effects of Facebook after finding causal evidence that its products harmed users’ mental health. The study found that “people who stopped using Facebook for a week reported lower feelings of depression, anxiety, loneliness, and social comparison.”

The European Union has attempted to tackle the issue with its Digital Services Act, which requires platforms to be transparent about the main parameters of their recommendation algorithms and to assess, and disclose their findings on, the potential negative impacts of their operations. The impacts explicitly covered include “negative effects on civic discourse and electoral processes, and public security.”

The Digital Services Act also requires platforms to give vetted researchers access to their data so they can study systemic risks independently. X's failure to comply with this requirement is among the reasons the European Commission cited for imposing a 120 million euro fine on the platform earlier this month.

Other reasons include a lack of transparency in X’s advertising repository and the platform’s blue checkmark, which allegedly deceives users because “anyone can pay to obtain the ‘verified’ status without the company meaningfully verifying who is behind the account.”

cointelegraph.com